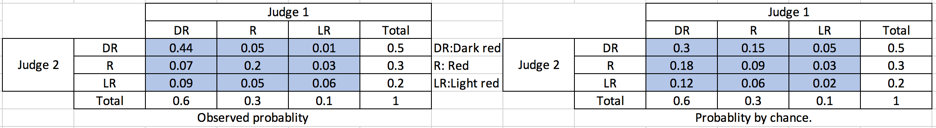

![\[Statistics Part 15\] Measuring agreement between assessment techniques: Intraclass correlation coefficient, Cohen's Kappa, R-squared value – Data Lab Bangladesh](https://datalabbd.com/wp-content/uploads/2019/06/15c-1.png)

[Statistics Part 15] Measuring agreement between assessment techniques: Intraclass correlation coefficient, Cohen's Kappa, R-squared value – Data Lab Bangladesh

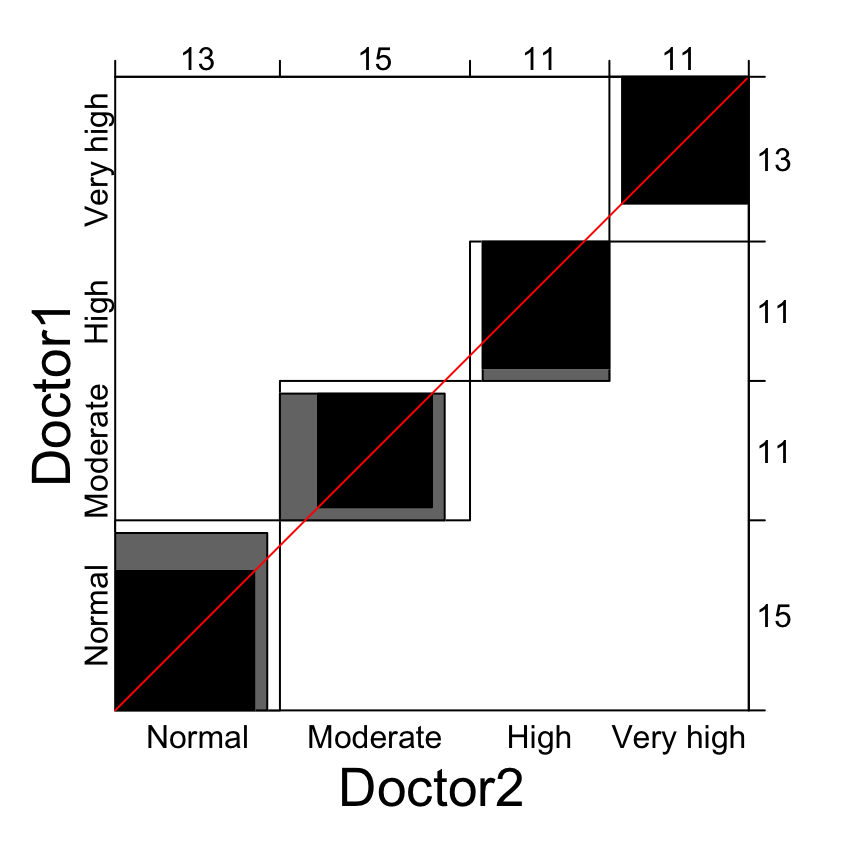

![\[Statistics Part 15\] Measuring agreement between assessment techniques: Intraclass correlation coefficient, Cohen's Kappa, R-squared value – Data Lab Bangladesh](https://datalabbd.com/wp-content/uploads/2019/06/15a-1.png)

[Statistics Part 15] Measuring agreement between assessment techniques: Intraclass correlation coefficient, Cohen's Kappa, R-squared value – Data Lab Bangladesh

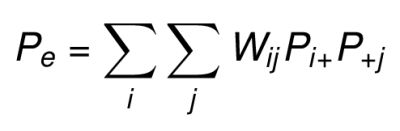

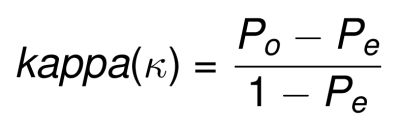

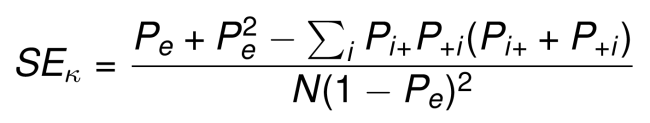

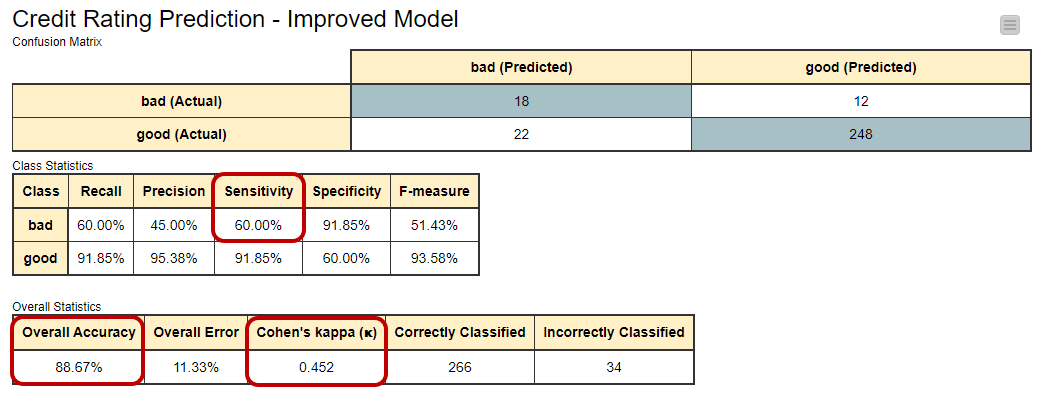

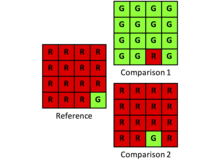

Kappa Coefficient for Dummies. How to measure the agreement between… | by Aditya Kumar | AI Graduate | Medium

![\[Statistics Part 15\] Measuring agreement between assessment techniques: Intraclass correlation coefficient, Cohen's Kappa, R-squared value – Data Lab Bangladesh](https://datalabbd.com/wp-content/uploads/2019/06/15b.png)

![\[PDF\] Beyond kappa: A review of interrater agreement measures | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/7dc97e85652502ccfab37c549c671408ce94a94e/9-Table2-1.png)